Unshackle Yourself from Statistical Significance

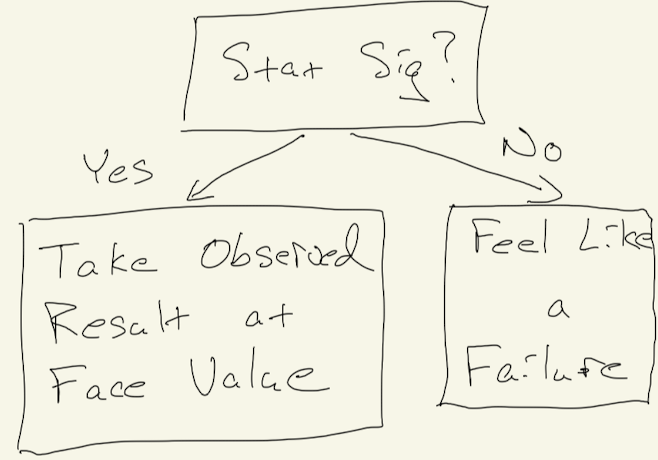

Too often I see people think about A/B testing in terms of the diagram above (Figure 1).

On one side is complete certainty: we know exactly the impact of whatever we were testing. On the other side is complete uncertainty: we have no idea what to do, so let’s just sweep this under the rug and hope no one notices.

This is a false dichotomy. A statistically significant result does not mean we should treat the observed result as a complete summary of what we have learned. And even without a statistically significant result, we may still have learned quite a bit, perhaps enough to satisfy the business objective motivating the test.

The most valuable thing to come out of an A/B test is a confidence interval, not a little asterisk denoting stat sig. A confidence interval gives the range of effects that are consistent with the data. To be even more precise, I think the points outside the confidence interval are the most valuable part: they are the possibilities the data effectively rule out.

Let’s see an example.

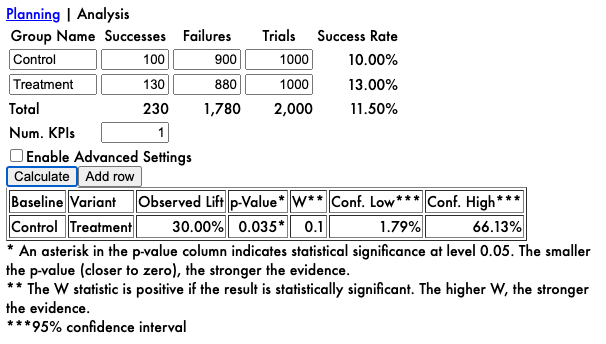

Suppose we are A/B testing a new subject line for an email campaign. We split an audience of 2,000 into two experiment groups of 1,000 each. We send the legacy subject line to the first (control) group and the new subject line to the second (treatment) group. In the control group, 100 people open the email; in the treatment group, 130 people do.

The observed open rates in the control and treatment groups are 10% and 13%, respectively, an observed lift of 30% in relative terms. Using my A/B testing calculator, we find that the p-value is 0.035, indicating a statistically significant difference in open rates between the two subject lines at the conventional 0.05 level.
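For readers who want to check the arithmetic, here is a minimal Python sketch of a pooled two-proportion z-test, one standard way to get a p-value for a difference in open rates. It is an assumption that the calculator uses this exact test, although it yields roughly the same p-value of 0.035. The helper name `two_proportion_p_value` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(opens_a: int, n_a: int, opens_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test (hypothetical helper)."""
    rate_a = opens_a / n_a
    rate_b = opens_b / n_b
    # Under the null hypothesis, both groups share a single open rate.
    pooled = (opens_a + opens_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Probability of a |z| at least this large if there were no real difference.
    return 2 * (1 - NormalDist().cdf(abs(z)))


control_opens, control_n = 100, 1000
treatment_opens, treatment_n = 130, 1000

control_rate = control_opens / control_n
treatment_rate = treatment_opens / treatment_n
relative_lift = treatment_rate / control_rate - 1
p_value = two_proportion_p_value(control_opens, control_n, treatment_opens, treatment_n)

print(f"open rates: {control_rate:.1%} vs {treatment_rate:.1%}")
print(f"relative lift: {relative_lift:.0%}, p-value: {p_value:.3f}")
```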

If we follow the logic in Figure 1, we would now conclude that since the result is statistically significant, we can pop the champagne and tell the CEO we increased the open rate by 30%! That would only set us up for later disappointment, as the confidence interval reveals. All this A/B test tells us is that the actual lift is plausibly anywhere between 1.79% and 66.13%. That’s a big range! Imagine telling your CEO, “we think it’s between 2% better and 66% better”. I doubt you’d pop the champagne in that case.
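A minimal sketch of how an interval like this can be computed, assuming the delta method on the log of the ratio of open rates and a 95% confidence level. That choice of method is an assumption, not necessarily what the calculator does, so the bounds come out close to, but not exactly, 1.79% and 66.13%. The sketch also illustrates the earlier point that effects outside the interval are the ones the data effectively rule out; the candidate lifts checked at the end are arbitrary illustrations, and `relative_lift_ci` is a hypothetical helper.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def relative_lift_ci(opens_a, n_a, opens_b, n_b, level=0.95):
    """Approximate CI for the relative lift rate_b / rate_a - 1.

    Uses the delta method on log(rate_b / rate_a), then exponentiates the
    bounds back to the ratio scale (an assumed method, not the calculator's).
    """
    rate_a = opens_a / n_a
    rate_b = opens_b / n_b
    log_ratio = log(rate_b / rate_a)
    # Delta-method standard error of the log ratio: (1 - p) / (n * p) per group.
    se = sqrt((1 - rate_a) / opens_a + (1 - rate_b) / opens_b)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return exp(log_ratio - z * se) - 1, exp(log_ratio + z * se) - 1


lower, upper = relative_lift_ci(100, 1000, 130, 1000)
print(f"95% CI for the relative lift: {lower:.2%} to {upper:.2%}")

# Lifts outside the interval are the ones the data effectively rule out.
for candidate in (0.0, 0.30, 0.75):
    verdict = "consistent with the data" if lower <= candidate <= upper else "ruled out"
    print(f"lift of {candidate:.0%}: {verdict}")
```

Note how wide the interval is: a 30% lift is consistent with the data, but so is a lift of only a couple of percent.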

Some of you might be thinking, “well, the important thing is to make the right decision, and statistical significance gives us the confidence that we are making the right decision”. I disagree. The important thing is to make a big impact, to “focus on the wildly important”. Running an A/B test takes time and resources. It isn’t enough for a decision to be the right one; it needs to be a hugely impactful one. Otherwise we’re wasting our energy on micro-optimizations.

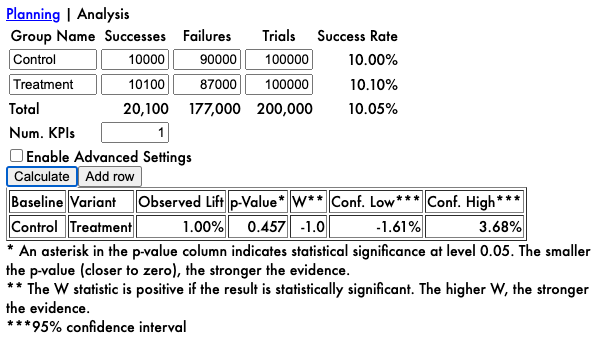

Let’s see an example without a statistically significant result. Now we have 200,000 subscribers on our mailing list. We send the legacy subject line to half and the new one to the other half. This time, 10,000 recipients in the control group open the email, and 10,100 recipients in the treatment group do.

The observed open rates in the control and treatment groups are 10% and 10.1%, respectively, an observed lift of 1% in relative terms. As we can see, the p-value is 0.457, which is definitely not statistically significant. But in no sense is this test a failure: the confidence interval tells us the actual lift is somewhere between -1.61% and 3.68%. So although the new subject line might be worse than the legacy one, it isn’t way worse. And although it might be better than the legacy one, it isn’t way better. Honestly, it just doesn’t matter which option we choose.
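The same kind of interval, again sketched with the delta method on the log ratio (an assumed method), reproduces the -1.61% to 3.68% range quoted above for this larger test. To make “it doesn’t matter which option we choose” concrete, the sketch also checks whether the whole interval sits inside a band of effects too small to care about. The 5% threshold `MIN_MEANINGFUL_LIFT` is a hypothetical value chosen for illustration; in practice it would come from the business context.

```python
from math import exp, log, sqrt
from statistics import NormalDist

# Hypothetical threshold: lifts smaller than this (in either direction) are not worth acting on.
MIN_MEANINGFUL_LIFT = 0.05


def relative_lift_ci(opens_a, n_a, opens_b, n_b, level=0.95):
    """Approximate CI for rate_b / rate_a - 1 via the delta method on the log ratio."""
    rate_a = opens_a / n_a
    rate_b = opens_b / n_b
    log_ratio = log(rate_b / rate_a)
    se = sqrt((1 - rate_a) / opens_a + (1 - rate_b) / opens_b)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return exp(log_ratio - z * se) - 1, exp(log_ratio + z * se) - 1


lower, upper = relative_lift_ci(10_000, 100_000, 10_100, 100_000)
print(f"95% CI for the relative lift: {lower:.2%} to {upper:.2%}")

# If every plausible lift is smaller than the threshold, either subject line is fine.
if -MIN_MEANINGFUL_LIFT < lower and upper < MIN_MEANINGFUL_LIFT:
    print("Neither way worse nor way better: pick either and move on.")
else:
    print("The data still allow a meaningful difference.")
```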

There’s a certain existential dread we need to get over when confronting a result like this. We all make dozens, if not hundreds or thousands, of decisions every day. And the over-analyzers among us might fantasize that each one of these decisions is going to change the course of our lives. But most of our decisions just don’t matter all that much. Eating a salad or a cheeseburger for lunch one time is not going to make or break your diet.

We all have finite mental energy to devote to decision-making, and if we waste that energy on irrelevant decisions, we won’t have any left for the truly important ones. When we get a result like the second example, we should be relieved, not disappointed. “Oh good, we can just move on”, I would say.

Over time, we can learn which sorts of changes make a big impact and which don’t. If we run a dozen subject line tests and none of them makes a big impact, just stop running subject line tests! Try something else!

Don’t be a prisoner to statistical significance. A/B testing should serve the business, not the other way around!